case study 2020 4 min read

HP OMEN Challenge 2019

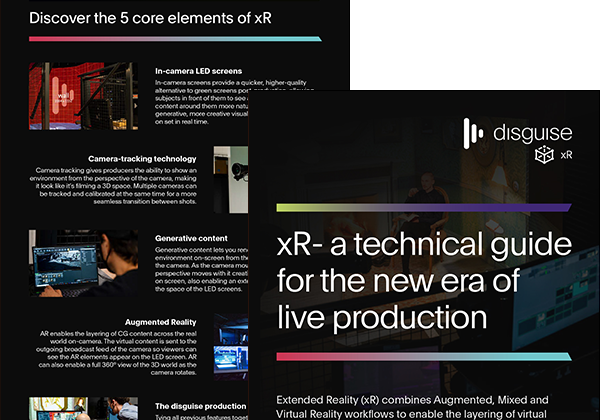

The disguise gx range and xR workflows turn the OMEN Challenge esports tournament into a unique and immersive experience.

When the OMEN Challenge 2019 was held in London in September 2019, disguise gx 2c and gx 1 media servers powered the real-time generative Notch content that accompanied the gameplay. The esports event was the first to be broadcast using disguise xR workflows, which combined Augmented, Virtual and Mixed Reality technologies.

The fifth annual OMEN Challenge was held with the support of OMEN by HP, with eight players competing for a prize pool of $50,000. The Counter-Strike: Global Offensive tournament featured 1-on-1 matches and four-player deathmatches. The event went above and beyond the traditional esports tournament format, offering a battle arena with a unique and immersive stage and content.

The project, run by creative media agency AKQA, was realised in collaboration with Scott Millar and Pixel Artworks, who designed and developed a bespoke pipeline toolset using disguise, Notch and TouchDesigner. The result was a never-before-seen broadcast with AR, Mixed Reality and live show elements, all streamed live on Twitch and other platforms.

“HP wanted to do something different with big LED video walls, AR and MR tricks that would look good to the live and broadcast audiences,” notes Technical Producer Scott Millar, who worked alongside Pixel Artworks to power video for the event using disguise. Real-time MR content was made in Notch, with data coming in from the game to power the infographics. The final output was handled in disguise, which fed the LED video walls.

Mixed Reality was used in two ways during the show, Scott explains. The first was in using a Notch-generated studio environment, which allowed the casters to be transported to a different world in-camera. The world could also be rendered from the game engine to place the casters directly into the map. The second use was to allow players and interviewers to “step into” the game world and replay the biggest moments. Using a Steadicam and rendering the game engine into both the LED and virtual worlds, the players could see themselves in the game, and describe their best moves.

“Working closely with the developer of the OMEN game and amateur map designers, we built a custom solution to make the real-world set, game data and content align with the digital equivalent. We essentially created a virtual studio and OMEN set which, through camera, would seem as though a real person was in the game,” explains Oliver Ellmers, Interactive Developer with Pixel Artworks. This was achieved by using xR to enlarge and overlay content in-camera, while MR tracked the real-world broadcast cameras so that they stayed aligned with the digital cameras in the game.
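To give a rough sense of what aligning a tracked broadcast camera with a digital camera can involve, here is a minimal Python sketch. It assumes a simple calibration made up of a uniform scale, a rotation about the vertical axis and an offset; the `StageToGame` class, its fields and all values are hypothetical, and the source does not describe the calibration method the team actually used.

```python
import math
from dataclasses import dataclass


@dataclass
class StageToGame:
    """Hypothetical calibration from stage space (metres) to game-map space."""
    scale: float        # game units per metre (assumed value)
    yaw_degrees: float  # rotation between stage axes and map axes (assumed)
    offset: tuple       # game-space position of the stage origin (assumed)

    def position(self, stage_xyz):
        """Map a tracked camera position on the stage into game coordinates."""
        x, y, z = stage_xyz
        yaw = math.radians(self.yaw_degrees)
        # Rotate in the horizontal plane (x, y); z is treated as "up".
        rx = x * math.cos(yaw) - y * math.sin(yaw)
        ry = x * math.sin(yaw) + y * math.cos(yaw)
        ox, oy, oz = self.offset
        return (self.scale * rx + ox, self.scale * ry + oy, self.scale * z + oz)

    def heading(self, stage_pan_degrees):
        """Shift the camera's pan by the same yaw, wrapped to [0, 360)."""
        return (stage_pan_degrees + self.yaw_degrees) % 360.0
```

With a calibration like this, each tracked pose from the real camera could be converted once per frame and applied to the virtual camera, so that both views share the same perspective.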

The production team overcame a number of challenges for this project, the main one concerning the sheer novelty of the technologies in use. “What we created had never been done before in this way,” Oliver points out. “On top of that, the game was live streamed and super fast: These were some of the best OMEN players in the world, and there wasn’t room for error.” The team had to pull data out of live matches quickly and visualise it for the broadcast, while implementing AR and MR designs in-camera for the viewers. Because the technology was so new, a lot of testing was required to fulfil the brief.
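As a rough illustration of turning live match data into broadcast graphics, the following Python sketch reduces a stream of match events to the running totals an on-screen infographic might display. The event format, the field names and the `Scoreboard` class are hypothetical; the source does not describe the actual game data feed or the custom code used on the show.

```python
import json
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Scoreboard:
    """Running totals that a graphics layer could read each frame."""
    kills: dict = field(default_factory=lambda: defaultdict(int))
    deaths: dict = field(default_factory=lambda: defaultdict(int))

    def apply(self, raw_event: str) -> None:
        # Hypothetical event shape:
        # {"type": "kill", "attacker": "<name>", "victim": "<name>"}
        event = json.loads(raw_event)
        if event.get("type") != "kill":
            return  # ignore events the graphics do not need
        self.kills[event["attacker"]] += 1
        self.deaths[event["victim"]] += 1

    def leader(self):
        """Return (player, kills) for the current top scorer, or None."""
        if not self.kills:
            return None
        return max(self.kills.items(), key=lambda item: item[1])


def build_scoreboard(raw_events):
    """Fold an iterable of JSON event strings into a Scoreboard."""
    board = Scoreboard()
    for raw in raw_events:
        board.apply(raw)
    return board
```

Keeping the per-event work this small is one way to stay responsive during a fast match: the graphics only ever read the current totals rather than reprocessing the full event history.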

The show used four disguise gx 2c media servers, supplied by 80six and Gray Matter, as well as two gx 1 media servers, acting as master and understudy. To power the game rendering and control, plus manage the game data, six TouchDesigner machines were used with custom code written to control the game engine.

“We needed to work with a framework that gave us the flexibility to make changes quickly, and finding those things together is pretty rare,” says Oliver.

“The flexibility of disguise, the integration of other systems and the ability to see multiple inputs in one area gave us opportunities that other products don’t offer. This is one of the reasons disguise was chosen.”

Oliver Ellmers, Pixel Artworks

Several disguise features proved particularly helpful on this project. A standout was disguise’s frame sharing over the network, a feature Oliver calls “a complete game-changer. We could make revisions quickly and so much more efficiently than before, and syncing systems was never so easy.”

Thanks to the flexibility of the disguise solution, the team were able to achieve three-way communication between Notch, TouchDesigner and disguise. This worked seamlessly, and the real-time workflow let them iterate quickly whenever an issue came up. “disguise was the only product that had the ability within its framework to make this possible,” Oliver declares.

Equipment

Credits

- Event organiser

- Technical producer

Scott Millar

- Production design

- Interactive developer

Oliver Ellmers

- Live broadcast

- Notch programmers

Marco Martignone & Lewis Kyle-White

- LED & disguise server suppliers

- disguise servers and operators

GrayMatter Video

- Images