blog 2021 5 min read

Going for gold – in conversation with disguise CTO, Ed Plowman

Following the public launch of xR with our latest r18 software, we caught up with disguise CTO Ed Plowman to learn more about his years developing a film-ready virtual production solution, and what disguise are planning for the future.

What first inspired the idea for xR?

Back at IBC 2018, disguise went public with what we considered at the time to be a bit of a science experiment. We’d developed a means of using a game engine paired with physical LEDs to create dynamic, real-time virtual environments live on set. Those environments can be seamlessly extended digitally and, depending on the needs of the production, can entirely replace green screens.

We received a lot of requests to get access to our builds from the show. So, we set up an insider programme to allow the community to get started and help us refine the system. We’ve been developing it in the years since then.

With the support of an Epic MegaGrant, we were able to advance our roadmap, accelerating our integration with Unreal Engine and bringing its creative capabilities to virtual production.

Why do you feel now is the time to launch r18, and go gold?

Last year, even with our xR platform still in beta, we became an industry leader in immersive real-time production: our software and hardware powered over 200 virtual projects across broadcast, corporate events, music, commercial shoots, film and episodic TV.

Then, we were recognised as one of the Financial Times’ top 1000 fastest growing businesses for 2021, and just a few months ago, announced that we will be supported in our growth by The Carlyle Group, who took a majority stake in the company, and by Epic Games who took a minority stake.

With everything we learned from our partners, and the new resources, we could accelerate a number of activities, including adding the finishing touches to r18. With all the improvements we were able to make, we knew we were ready to take xR mainstream.

What new features are you most excited about with r18?

I love the cluster rendering system we developed in partnership with Epic Games and select collaborators from our user community. We talked to them about how we wanted to remove the limitations of a virtual production studio by scaling out real-time content almost without limit.

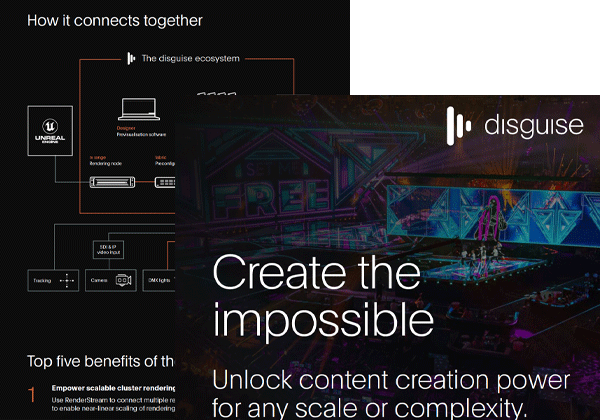

Working together, we created our cluster rendering environment, which allows you to flexibly partition renders across multiple machines. It's not simply a matter of taking the picture and tiling it as a mosaic across multiple machines. We can do true channel-based rendering: achieving real-time content of the highest quality, detail and frame rate at an almost infinite scale.

Another of my favourite features is our spatial calibration in r18. It’s actually a dividend from a previous R&D project that we did around OmniCal, which was our technology for projection mapping. There's a lot of technology in OmniCal which relates to mapping 3D space accurately, in order to put multiple projections into the same scene and have them appear like a continuous image.

For r18, we've repurposed that technology and brought it into the virtual production environment with LEDs. We’ve applied the same kind of principles to the spatial calibration and location of the LED walls in order to make it easier for you to move around in that environment, while still keeping the correct perspective. It also makes it easier to match up images against digital set extensions, when you do wide shots that come out of the LED volume.

What has the reaction been like so far from the virtual production community?

The reaction to r18 has been amazingly positive. We’ve noticed a lot of engagement from the community around the fact that we've introduced the ACES workflow into the product.

What we’ve seen is that the users who have picked this up fastest have been doing backdrop shots, but now that they've made that investment, they're also starting to do more creative things with the technology. They’ve engaged with the cutting-edge stuff really quickly, and we’re so excited to be a part of enabling that.

Can you tell us more about what you have planned for the future?

I can’t say much, but obviously we have more enhancements planned for our integration with Unreal, including features for fixed installs and live show verticals. We are particularly excited about the upcoming Unreal Engine 5.0 as well! I can also reveal that we've got funding from both the UK government and the EU for R&D to further improve colour calibration and dynamic colour correction.

One of the problems with LED surfaces is that, as you move around them, the colour reproduction changes, because an LED wall is projecting light in one direction. As soon as you come off-axis, the chromatic reproduction changes. What we’re doing is developing technologies that allow us to correct for those off-axis shifts, and do that in real-time for dynamic, spot-on colour reproduction.
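To make the off-axis idea concrete, here is a minimal Python sketch. It is an illustration only, not disguise's method: the function names, the cosine-power falloff model and the per-channel exponents are all assumptions invented for this example. A real correction would be driven by measured panel data.

```python
import math

def viewing_angle_deg(camera_pos, panel_point, panel_normal):
    """Angle in degrees between the panel normal and the direction to the camera."""
    to_cam = [c - p for c, p in zip(camera_pos, panel_point)]
    dist = math.sqrt(sum(v * v for v in to_cam))
    norm = math.sqrt(sum(n * n for n in panel_normal))
    if dist == 0 or norm == 0:
        raise ValueError("camera must be off the panel and the normal non-zero")
    cos_theta = sum(v * n for v, n in zip(to_cam, panel_normal)) / (dist * norm)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding error
    return math.degrees(math.acos(cos_theta))

# Hypothetical values: each channel is assumed to dim as cos(angle) ** exponent.
# Different exponents per channel are what produce a colour shift off-axis.
FALLOFF_EXPONENTS = {"r": 0.8, "g": 1.0, "b": 1.3}

def correction_gains(angle_deg, exponents=FALLOFF_EXPONENTS, max_gain=4.0):
    """Per-channel gains that undo the assumed falloff, capped at max_gain."""
    cos_theta = math.cos(math.radians(angle_deg))
    if cos_theta <= 0:
        raise ValueError("camera is at or behind the plane of the panel")
    return {
        channel: min(1.0 / (cos_theta ** k), max_gain)
        for channel, k in exponents.items()
    }

if __name__ == "__main__":
    # Camera 5 units in front of the wall and 5 units to the side.
    angle = viewing_angle_deg((5.0, 0.0, 5.0), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
    print(round(angle, 1), correction_gains(angle))
```

On-axis, every gain in this sketch is 1.0, so the image is left untouched. As the camera moves off-axis the gains diverge per channel, which is the kind of adjustment a real-time system would have to recompute as the tracked camera moves.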

That’s just another example of how our team is always looking to develop new ways to make xR and virtual production more powerful, and to allow filmmakers and creators to realise their visions – that’s what really excites us.