Blog, 2019

Q&A with MalfMedia’s Michael Al-Far on AR workflows for the Yin Trend Music Night project

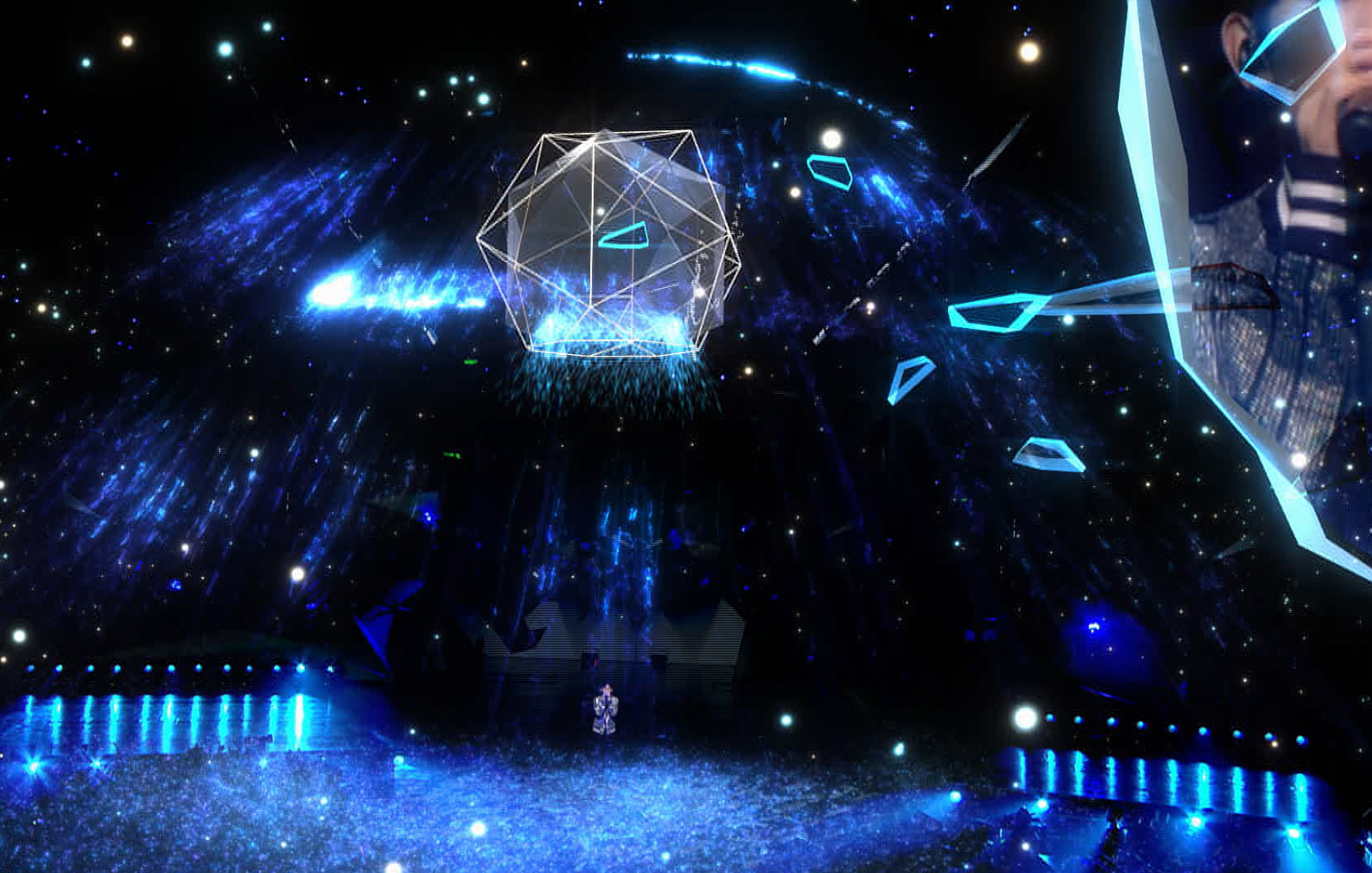

In January 2019, the Yin Trend Music Night project was hosted in Nanjing, China. MalfMedia used Notch and a disguise gx 2 server, alongside stYpe camera tracking, to power the video projection. We sat down with Michael Al-Far of MalfMedia to learn more about the workflows behind the event.

“Tencent’s YIN concert was designed to inspire young people to explore the world and make changes. As such, we decided to take a leap and join the technological revolution in live event broadcasting. For the first time, we used the disguise gx 2 server together with Notch software to create an unprecedented multicam AR experience for online viewers. The successful combination of disguise media servers and MalfMedia’s design skills takes the augmented reality experience for this type of broadcast to the next level.”

Sizhe Huang, Guan Yue International

Could you tell us more about the concept behind Yin Trend Music Night?

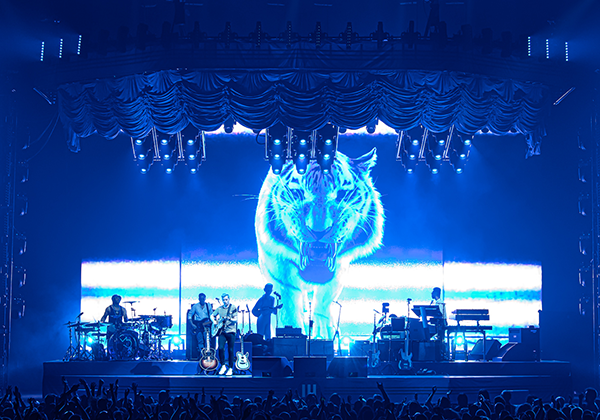

Michael: Tencent’s New Year show, Yin Trend Music Night, aimed to inspire young people to get out into the world and make a change. The event was available online only, with popular Chinese pop stars Hua Chenyu, Li Yuchun, Wu Qingfeng, Kris Wu and Rocket Girls 101 performing.

What workflows did you use to power this event, and why did you choose them?

Michael: We use a combination of Notch and disguise for our augmented reality (AR) projects. Notch allows us to combine camera tracking with the real-time rendering needed for AR, and it has a huge catalogue of effects that can all be combined with the camera tracking. The disguise gx 2 lets us embed the Notch block and use a single server to process the camera tracking and run the real-time render engine.

Was there anything innovative in your workflow or design process for this project?

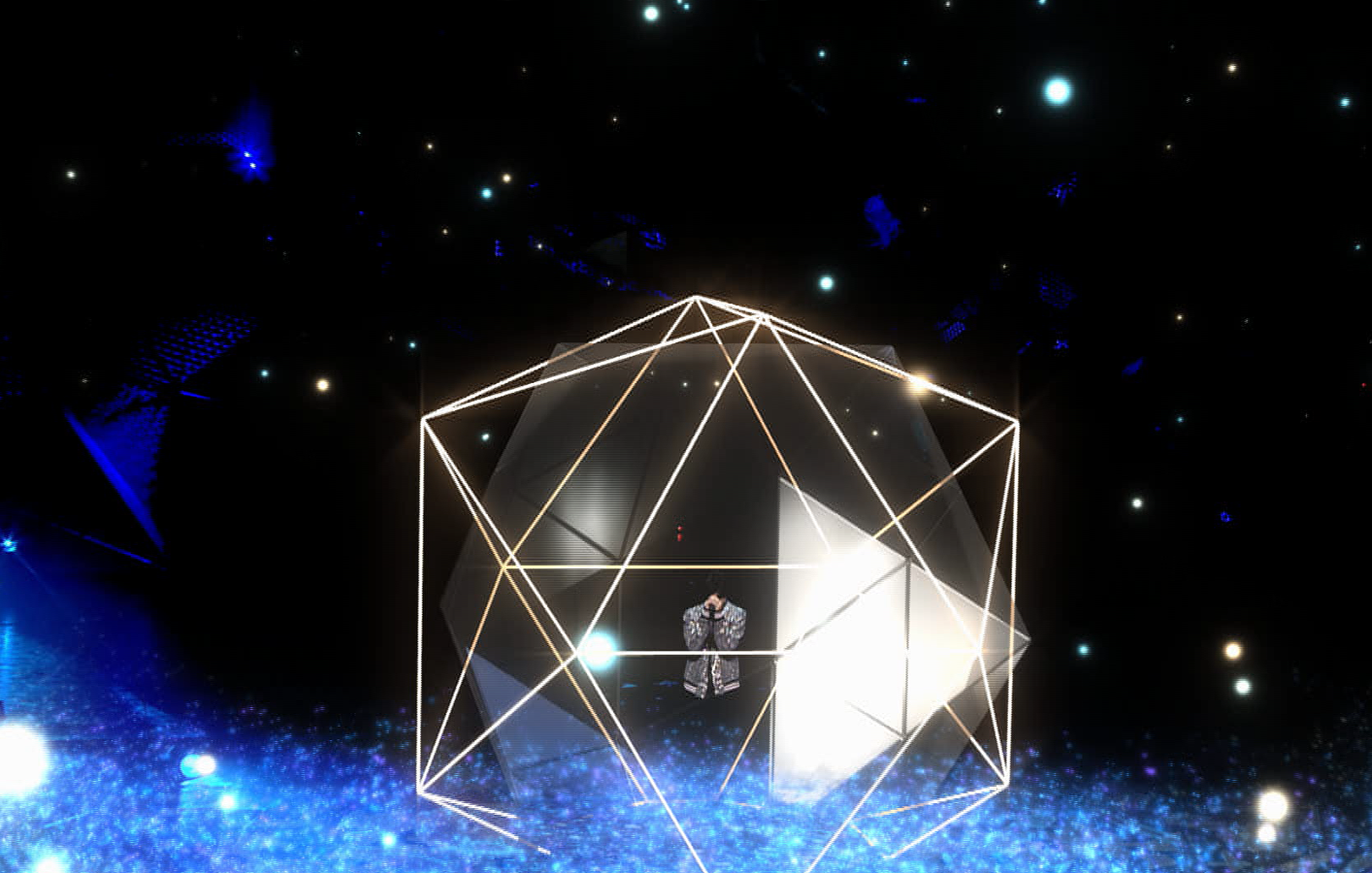

Michael: Every time we use disguise software and hardware, we find small improvements, whether in asset design, asset management or timeline programming. For this event, we integrated real-time reflections into the crystal, which helped the AR graphics blend seamlessly into the real-world environment.

Another novelty on this show was the AR fire: we set the stage on fire in AR with more than a million particles, all running at a steady frame rate high enough to allow smooth camera tracking.

What other integrations did you use and what roles did they play within the workflow?

Michael: Hans Cromheecke and Maarten Francq were in charge of integration and programming. They used a clever system of MIDI and OSC controls to interact with the keyframed timeline and with other elements of the design, such as the transparency of the crystal and the speed and agility of the fire. Using disguise’s built-in communication lines, they could intervene in the design in real time.

How was the support from disguise?

Michael: The support we received from disguise APAC went above and beyond. MalfMedia has been at the forefront of this exciting new direction for disguise, in which we use the media servers not only for linear playout of video and projection but also for camera tracking. Our relationship with disguise in this field goes back a long way; it has led to considerable success in the past and will lead to even more in the future.