blog 2021 5 min read

The journey to extended reality: A conversation with the disguise development team

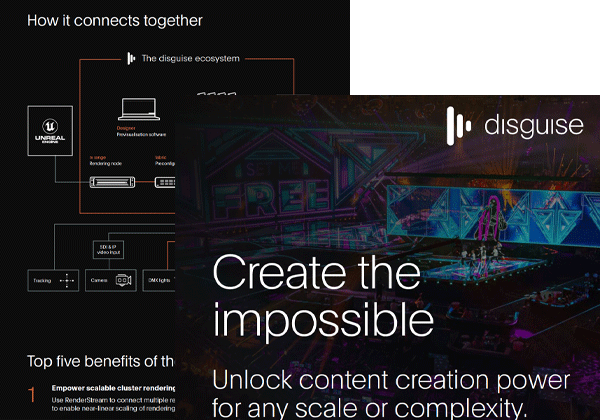

Extended Reality (xR) started off as a small beta program, growing into a fleshed-out suite of features that make virtual production, cluster rendering and RenderStream accessible to everyone. We spoke to our disguise development team to find out more about their three-year journey building xR.

Our work in Extended Reality (xR) started with one question: how can we deliver scalable real-time content anywhere and everywhere? Since those early days in 2018, we have come a long way towards answering that question. Together with our user community, we have built a reliable and robust system to generate real-time, scalable content.

The disguise r18 software has opened up public access to xR, enabling new opportunities in immersive real-time production at scale across the live entertainment, film and episodic TV, corporate events, and TV and sports broadcast industries. The release includes the most advanced integration of Unreal Engine to date and much-anticipated features such as cluster rendering and the ACES colour pipeline.

As the technology and its programming demands grew, so did our team of disguise developers. Three key players in the development of xR have been:

- Chief Software Architect, Tom Whittock

- Global Head of Software Engineering, Col Rodgers

- Senior Software Developer, James Bentley

Here is their story:

What was the goal of r18?

Tom Whittock: We’ve worked hard to consolidate our workflows so that we can help bring scalable, real-time content to more live and streamed events and film productions. xR is now fully supported by disguise, with a full suite of calibration tools and an array of different ways to generate, mix, and output 3D spatial content.

Col Rodgers: One thing we’ve seen in our community of users is the desire for increasingly complex visual fidelity, which until now has outweighed the capabilities of even the most powerful GPUs. To solve this issue, we teamed up with Epic Games and their nDisplay system to create our cluster rendering system. This piece of tech offers the ability to scale content quality linearly by adding new render nodes. There’s now no need to compromise content quality for performance.

James Bentley: We have also introduced RenderStream asset launcher features, which allow you to launch, monitor, stop and control render nodes from within the disguise UI. This saves time on-site and allows operators to have more control over their scenes with less manual intervention.

Who is xR for?

Col Rodgers: Everyone! While a lot of the concepts will feel familiar, this is the first step in moving disguise into a much bigger market, so there are additional complexities. But, as ever, with new systems comes new training. Our new training materials and our amazing support team will help with this transition.

James Bentley: xR is for anyone who wants to create large-scale, immersive visual experiences. The new, user-friendly workflow replaces the complicated maths and manual calibration steps of the previous version with fast, graphic and intuitive tools that make calibration and troubleshooting much simpler.

Tom Whittock: xR is just another set of tools which people can integrate in a variety of productions for all sorts of use cases we haven't thought of yet. The best bit about working at disguise is seeing the contortions people put the software through to achieve amazing unexpected results.

From L-R: Col Rodgers, Tom Whittock, James Bentley

What challenges can xR solve?

James Bentley: Virtual production can be extremely technical due to the broad range of technologies involved. xR makes each process simple, automated, reliable and clear. That means, instead of relying on arcane knowledge of complex systems, users can pick up the skills far more easily. The sheer power of the system means that directors, videographers and designers can let their imagination—rather than technical limitations—lead their production.

What obstacles have you encountered in the development of xR?

James Bentley: One of our largest development challenges was the sudden need for virtual production resources in the wake of the pandemic. We had to rapidly bring features that had been science experiments out into a show-ready state in record time. Luckily for us, our users are incredibly knowledgeable and motivated to work with us to reach this point. We can't thank them enough for working with us to create amazing new features.

How did partnering with the user community impact the development of xR?

James Bentley: User feedback has been integral to the development of xR. We worked directly with many of the largest studios in the xR world, listened to and implemented their feedback, and pushed the software to be even more responsive to user needs.

Col Rodgers: Our software is incredibly complex - it’s a collection of independent tools. As such, the beta testing groups helped us identify bugs in an array of use cases, so we could make the software and workflows robust. We also listened to all the feedback on usability, which helped shape the end result.

What can we expect from disguise software in the future?

James Bentley: The cluster rendering system has laid the groundwork for more modular set-ups to come. At disguise, we will continue to build on the system to keep on giving our community of users the tools they need to create their vision. We couldn't be prouder of the new software release and we're really looking forward to the continuous development of great features in r18 and beyond.

Col Rodgers: We’re concentrating on hitting new markets as well as improving and tweaking the system for our existing markets. In the upcoming releases we will use the new features as foundations for even greater things.

Download r18 here, or to find out more, read our CTO’s thoughts about the new release.